Adobe Flash can make data difficult to extract. This tutorial will teach you how to find and examine raw data files that are sent to your web browser, without worrying how the data is visually displayed.

For example, the data displayed on this Recovery.gov Flash map is drawn from this text file, which is downloaded to your browser upon accessing the web page.

Inspecting your web browser traffic is a basic technique that you should do when first examining a database-backed website.

Background

In September 2008, drug company Cephalon pleaded guilty to a misdemeanor charge and settled a civil lawsuit involving allegations of fraudulent marketing of its drugs. It is required to post its payments to doctors on its website.

Cephalon’s report is not downloadable and the site disables the mouse’s right-click function, which typically brings up a pop-up menu with the option to save the webpage or inspect its source code. The report is inside a Flash application and disables copying text with Ctrl-C.

We asked the company why it chose this format. Company spokeswoman Sheryl Williams wrote in an e-mail: “We can appreciate the lack of ease in aggregating data or searching based on other parameters, but this posting was not required to do these things. We believe the [Office of the Inspector General]’s requirement was intended for the use of patients, who can easily look up their [health care provider] in our system.”

Software to Get

- Firefox

- The Firebug plugin, to monitor your browser’s web traffic

- Ruby, the scripting language

- Nokogiri, an XML parsing library for Ruby

Instead of using Firebug, you can also use Safari’s built-in Activity window, or Chrome’s Developer Tools, for the inspection part. To parse the result, we use Ruby and Nokogiri, which is an essential library for any kind of web scraping with Ruby.

A Series of Tubes…and Files

While the site makes the data difficult to download, it’s not impossible. In fact, it’s fairly easy with some understanding of web browser interaction. The content of a web page doesn’t consist of a single file. For instance, images are downloaded separately from the webpage’s HTML.

Flash applications are also discrete files, and sometimes they act as shells for data that come in separate text files, all of which is downloaded by the browser when visiting Cephalon’s page. So, while Cephalon designed a Flash application to format and display its payments list, we can just view the list as raw text.

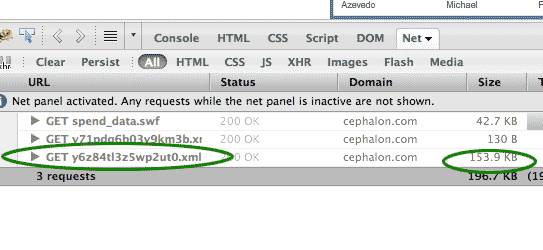

Firebug can tell you what files your browser is receiving. In Firefox, open up Firebug by clicking on the bug icon on the status bar, then click on the Net panel. This panel shows every file that was received by your web browser when it accessed Cephalon’s page.

We know we’re looking for the Flash file, so let’s look for that first. Flash applets use the suffix swf. The only one listed is spend_data.swf. In Firebug, right-click on the listing, copy the url, and paste it into a new browser window:

http://www.cephalon.com/Media/flash/spend_data-2009.swf

You can see the Flash file in its context here: http://www.cephalon.com/our-responsibility/relationships-with-healthcare-professionals/archive/2009-fees-for-services.html.

You’ll get a larger-screen view of the list, though that doesn’t really help our data analysis. As you may have noticed in the Firebug Net panel, spend_data.swf is less than 45 kilobytes, which doesn’t seem large enough to contain the entire list of doctors and payments. So where is the actual data stored?

Sniffing Out the Data

Here’s how find it: First, clear your cache in Firefox by going to Tools->Clear Recent History and selecting Cache. With Firebug still open, refresh the browser window that has spend_data.swf open.

Firebug’s window tells us that besides receiving spend_data.swf, our browser downloaded two xml files. One of these is more than 100 kilobytes, which is about what we would expect for an XML-formatted list of a few hundred doctors.

Now right-click on the file in Firebug and select Open in New Tab, and then View Page Source by right-clicking in the new tab. You should see a text file full of entries like the following:

<row> <value>100001</value> <value>$ 100,001 - $ 110,000</value> <value>14057447</value> <value>Rizzieri, David A</value> <value>Rizzieri</value> <value>David</value> <value>MD</value> <value>Durham, NC</value> <value>102400</value> <value>Honoraria</value> </row>

That’s what we were looking for: a well-structured list of the doctors and what they got paid. Now it’s a simple matter of using an xml parser, like Ruby’s Nokogiri, to iterate through each “row” node and pick up the essential values.

Parsing with Nokogiri

The following is a brief example of Nokogiri’s most basic methods. It assumes you have Ruby and Nokogiri installed, and a little familiarity of basic programming.

The two Nokogiri methods we’re most interested in are:

- css – this lets us select tags inside XML and HTML documents. In this example, we want the value and row tags.

- text – with each element returned by css, text will give us the actual characters enclosed by the element’s tags.

Each row represents a record, and each value represents a datafield, like name and location. So, we simply want to read each row and select the values we’re interested in.

require 'rubygems'

require 'nokogiri'

# you should set the following filename variable to whatever the name of the xml file is, either online,

# or if you've downloaded it on to your hard drive

filename = 'cephalon-data.xml'

file = Nokogiri::XML(open(filename))

# use 'css' to select each 'row' and iterate through each one

file.css('row').each do |row|

# select each value in the row with 'css'

values = row.css('value')

# The 4th, 8th, and 9th values contain the doctor's name, city, and payment amount, respectively

# (remember that Ruby arrays start their count at zero)

# put the three value elements' text in an array, join them with a tab-character, and print the line

# to the screen

puts [values[3].text, values[7].text, values[8].text].join("\t")

end

Here’s a compact variation of the above code that writes the result into a file:

require 'rubygems'

require 'nokogiri'

filename = 'cephalon-data.xml'

File.open('cephalon-output.txt', 'w'){ |output_file|

Nokogiri::XML(open(filename)).css('row').map{|row| row.css('value').map{|v| v.text}}.each do |values|

output_file.puts( [values[3], values[7], values[8]].join("\t") )

end

}

So, what first appeared to be the most difficult report to parse ends up being the easiest. Whether you’re dealing with a Flash application or a HTML database-backed website, your first step should be to see what text files your browser receives when accessing the page.

The Dollars for Docs Data Guides

Introduction: The Coder’s Cause – Public records gathering as a programming challenge.

- Using Google Refine to Clean Messy Data – Google Refine, which is downloadable software, can quickly sort and reconcile the imperfections in real-world data.

- Reading Data from Flash Sites – Use Firefox’s Firebug plugin to discover and capture raw data sent to your browser.

- Parsing PDFs – Convert made-for-printer documents into usable spreadsheets with third-party sites or command-line utilities and some Ruby scripting.

- Scraping HTML – Write Ruby code to traverse a website and copy the data you need.

- Getting Text Out of an Image-only PDF – Use a specialized graphics library to break apart and analyze each piece of a spreadsheet contained in an image file (such as a scanned document).